Risk Assessment of Essential Product Requirements: Prerequisites

Episode 63: Better Built By Burkhard

Dear Reader,

My talk Building an EU CRA Compliant Operator Terminal was accepted for the Embedded Software Engineering (ESE) Congress (1-5 December 2025 in Sindelfingen). As I must provide an abridged written version for the conference proceedings by 12 October 2025, I had the idea to write it up as a newsletter.

I quickly realised that the scope of the original title was far too big and that I should focus on the most important and most difficult part of EU CRA compliance: the risk assessment of the essential product requirements (Annex I, Part I). When I reached the 2000-word mark, it was clear that I had to split the newsletter into three parts: Risk Assessment of Essential Product Requirements - Prerequisites, Process and Documentation.

Besides reading and writing about the EU CRA, I also managed to write two smaller technical posts.

Running Wayland Clients as Non-Root Users. Far too many embedded Linux systems run Qt applications with root privileges so that a Wayland compositor like Weston can display them. This violates the cybersecurity principle of least privilege - and hence the EU Cyber Resilience Act (CRA). I'll show you why neither the Wayland compositor nor its clients (the Qt applications) need to run as root - and how to make your system a little bit more secure.

DISTRO_FEATURES:append After DISTRO_FEATURES:remove Has No Effect. The Yocto build always applies all :remove operations after all :append operations for a variable. Hence, you can never re-append an item if you removed it in any metadata file. It’s gone forever. Fortunately, there is a trick to get a removed item back into the variable. As so often in programming, it just takes another indirection.
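The ordering can be illustrated with a toy model in Python. It is a simplification written for illustration, not BitBake's actual implementation, and the function name resolve_variable is hypothetical. The model assumes that all :append operations on a variable are applied first and all :remove operations afterwards, regardless of where they appear in the metadata.

```python
def resolve_variable(base, operations):
    """Toy model of BitBake's :append and :remove for a single variable.

    `operations` lists (operator, value) tuples in parse order, where
    operator is "append" or "remove". Simplifying assumption: every
    :append is applied before any :remove, so a later :append cannot
    bring back an item that some :remove deletes.
    """
    value = base
    # Pass 1: all :append operations. Like BitBake, add no space, so
    # appended values usually start with one, e.g. " x11".
    for operator, text in operations:
        if operator == "append":
            value += text
    # Pass 2: all :remove operations. Each removes every occurrence
    # of the whitespace-separated words it lists.
    removed = set()
    for operator, text in operations:
        if operator == "remove":
            removed.update(text.split())
    return " ".join(word for word in value.split() if word not in removed)


# The :remove comes first in parse order, the :append later.
operations = [
    ("remove", "x11"),   # e.g. DISTRO_FEATURES:remove = "x11"
    ("append", " x11"),  # e.g. DISTRO_FEATURES:append = " x11"
]
print(resolve_variable("x11 wayland opengl", operations))
```

Running the example prints "wayland opengl": x11 stays removed, although it was appended after the removal.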

Enjoy reading,

Burkhard 💜

Risk Assessment of Essential Product Requirements: Prerequisites

Legal Context

For the purpose of complying with [essential cybersecurity requirements set out in Part I of Annex I], manufacturers shall undertake an assessment of the cybersecurity risks associated with a product with digital elements and take the outcome of that assessment into account during the planning, design, development, production, delivery and maintenance phases of the product with digital elements with a view to minimising cybersecurity risks, preventing incidents and minimising their impact, including in relation to the health and safety of users.

EU CRA, Article 13(2) (emphasis mine)

Article 13(2) mandates a risk assessment for the essential requirements of Annex I, Part I, which relate to product properties. Manufacturers must keep the risk assessment up to date during the whole support period of the product. They must implement concrete security measures that minimise cybersecurity risks and the damage they cause. Therefore, producing lots of documents and presenting them as the risk assessment won't be enough.

The risk assessment is a crucial part of the conformity assessment for default products (Annex VIII, Part I, §2), important products (Annex VIII, Part I, §2) and critical products (Annex VIII, Part IV, §3.1(b)). The more critical a product is, the harder manufacturers must try to minimise the risks and the damage caused by them.

An embedded device fails the conformity assessment of the EU CRA if the violation of any essential product requirement poses a significant cybersecurity risk. By implementing appropriate mitigation measures, manufacturers must reduce such a risk to an acceptable, non-significant level. Satisfying the essential product requirements is a necessary but not sufficient condition for conformity. Still, once they satisfy them, manufacturers are done with the most difficult and time-consuming part of the conformity assessment.

Will manufacturers get any help in the form of harmonised standards from the regulator? No for default products. Yes by 30 August 2026 for important and critical products. See the summary in my previous newsletter. You can think of a harmonised standard as a huge list of criteria. If a product satisfies all criteria, it passes the conformity assessment of the EU CRA. This is called presumption of conformity.

In short, manufacturers of default products - that is, most manufacturers - will have to work out a risk assessment on their own. And manufacturers of important and critical products shouldn’t wait another year for a harmonised standard either. Everyone should start now!

Important Terms

Vulnerabilities

(40) ‘vulnerability’ means a weakness, susceptibility or flaw of a product with digital elements that can be exploited by a cyber threat;

(41) ‘exploitable vulnerability’ means a vulnerability that has the potential to be effectively used by an adversary under practical operational conditions;

EU CRA, Article 3(40,41)

According to essential product requirement §2a, the ultimate goal of the risk assessment is to demonstrate that the scrutinised device has no known exploitable vulnerabilities. A single exploitable vulnerability renders a device non-compliant with the EU CRA. Manufacturers need not eliminate vulnerabilities fully, which might be impossible or too expensive. They only need to put up enough obstacles that exploiting a vulnerability becomes too costly or too time-consuming.

Here are some typical examples of vulnerabilities for embedded devices.

The manufacturer’s support team can log in to all devices as root with ssh.

All applications run with root privileges.

Most components of the Linux system reached end of life three years ago.
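The first vulnerability in the list, root login via ssh, can be detected automatically. The following Python sketch inspects the text of an OpenSSH server configuration (sshd_config) and reports whether root may log in. It relies on two documented OpenSSH behaviours: for each keyword, the first obtained value is used, and the default of PermitRootLogin has been prohibit-password since OpenSSH 7.0, which still allows root to log in with a key. The function names are hypothetical. The sketch deliberately ignores Match blocks and Include directives, so treat a negative result with caution. On a running device, `sshd -T` prints the effective configuration and is the more reliable source.

```python
import re

# Default since OpenSSH 7.0: root may log in, but not with a password.
DEFAULT_PERMIT_ROOT_LOGIN = "prohibit-password"


def effective_permit_root_login(config_text):
    """Return the effective global PermitRootLogin value of an sshd_config.

    Simplified on purpose: only the global section before the first
    'Match' block is considered, and 'Include' directives are ignored.
    """
    for raw_line in config_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        # Keyword and argument are separated by whitespace or by '='.
        parts = re.split(r"\s*=\s*|\s+", line, maxsplit=1)
        keyword = parts[0].lower()  # keywords are case-insensitive
        if keyword == "match":
            break  # everything below belongs to conditional blocks
        if keyword == "permitrootlogin" and len(parts) == 2:
            return parts[1].strip()  # sshd uses the first obtained value
    return DEFAULT_PERMIT_ROOT_LOGIN


def root_can_log_in(config_text):
    """Any value other than 'no' lets root log in in some way."""
    return effective_permit_root_login(config_text) != "no"


print(root_can_log_in("PermitRootLogin yes\n"))
print(root_can_log_in("# PermitRootLogin not set\n"))
print(root_can_log_in("PermitRootLogin no\nPermitRootLogin yes\n"))
```

The three calls print True, True and False. The second case shows why a missing setting is not a safe setting: the default still admits root with a key, which is exactly what a support team with a shared key would use.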

Assets

A vulnerability makes it easier for good actors to damage the assets of a device accidentally and for bad actors to damage them intentionally. Such damage reduces the value generated by the device and by all the systems connected directly or indirectly to the device: the whole ecosystem of the device.

The driver terminal of a harvester has the following assets, among others.

Availability. A harvester that stands still is losing money.

Sensitive personal information like the farmers’ contact details or the locations of the fields.

Communication with the tractor. A tractor with a trailer autonomously drives alongside the harvester to pick up the grain. If the communication between harvester and tractor is disrupted, grain may end up in the field or the next tractor with trailer won’t be available in time.

Safety of people. If the rear-view camera fails, the harvester may run over a person in its blind spot.

The harvester itself. The theft of a harvester means a loss of a quarter of a million euros or more.

Cybersecurity Threats and Threat Actors

The official definition of cyber threat corroborates that manufacturers should look at the ecosystem of a device and not just at the device itself.

(8) ‘cyber threat’ means any potential circumstance, event or action that could damage, disrupt or otherwise adversely impact network and information systems, the users of such systems and other persons;

Regulation (EU) 2019/881, Article 2(8) via EU CRA, Article 3(46)

The annual report ENISA Threat Landscape 2024 (PDF) lists seven prime threats against information systems and explains them in detail: ransomware, malware, social engineering, threats against data (data breaches and leaks), threats against availability (DoS = Denial of Service), information manipulation, supply chain attacks. All these threats are relevant for embedded devices, too.

ENISA has another report, Foresight Cybersecurity Threats for 2030 (PDF), that forecasts the following top threats for 2030: supply chain compromise of software dependencies, skill shortage, human error combined with exploiting legacy systems within the ecosystem, and exploitation of unpatched and out-of-date systems within the overwhelmed ecosystem. It’s incredible how well these threats describe the reality of developing embedded devices 😮‍💨

Knowing the most important threats helps manufacturers identify vulnerabilities in their ecosystem. Threats tell manufacturers to look at all direct and indirect network connections to and from the device, at all hardware ports (USB, Debug, Serial, I2C, SPI, CAN, etc.), at all users having access to the device, at all commercial and free open-source software components installed on the device, at all stakeholders including developers, managers and suppliers, at the CI/CD pipeline, at external and internal source code repositories, etc.
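Looking at all network connections can start on the device itself. As a minimal sketch, assuming a Linux target, the Python function below parses the kernel's /proc/net/tcp table (IPv4 only; /proc/net/tcp6 would need similar treatment) and lists every port on which some process listens, together with the user ID owning the socket. Each of these ports belongs to the attack surface that the risk assessment must cover, and a listener owned by root hints at a violation of least privilege. The function name and the sample data are made up for illustration.

```python
import socket
import struct

TCP_LISTEN = "0A"  # state code of a listening socket in /proc/net/tcp


def listening_ports(proc_net_tcp_text):
    """Return (ip, port, uid) for each listening IPv4 TCP socket.

    Expects the text of /proc/net/tcp. The kernel prints addresses in
    the host's byte order, so run this on the device itself (or on a
    machine with the same endianness).
    """
    result = []
    for line in proc_net_tcp_text.splitlines()[1:]:  # skip the header
        fields = line.split()
        # fields[1] = local address, fields[3] = state, fields[7] = uid
        if len(fields) < 8 or fields[3] != TCP_LISTEN:
            continue
        hex_ip, hex_port = fields[1].split(":")
        ip = socket.inet_ntoa(struct.pack("=I", int(hex_ip, 16)))
        result.append((ip, int(hex_port, 16), int(fields[7])))
    return result


# Shortened, made-up example of /proc/net/tcp content.
SAMPLE = """\
  sl  local_address rem_address   st tx_queue rx_queue tr tm->when retrnsmt   uid  timeout inode
   0: 00000000:0016 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 1001
   1: 0100007F:1F90 00000000:0000 0A 00000000:00000000 00:00000000 00000000  1000        0 1002
   2: 0A000002:0016 0A000001:C350 01 00000000:00000000 02:00098F3A 00000000     0        0 1003
"""

for ip, port, uid in listening_ports(SAMPLE):
    owner = "root" if uid == 0 else f"uid {uid}"
    print(f"{ip}:{port} owned by {owner}")
```

On a little-endian machine, the sample yields the ssh port 22 on all interfaces, owned by root, and port 8080 on the loopback interface, owned by uid 1000. The established connection in the last line is skipped. On the device, you would pass the content of /proc/net/tcp instead of the sample.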

Threat actors exploit vulnerabilities and turn a threat into real damage. ENISA identifies several threat actors in its Threat Landscape 2024 (PDF):

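Well-funded state-nexus actors are in for the long haul and may plant exploits in devices months or years before the actual attack. Well-known examples are the NotPetya worm attributed to Russia, the Stuxnet worm widely attributed to the USA and Israel, and the xz backdoor, whose authors remain unidentified but whose years of patient preparation point to a well-resourced actor.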

Cyber criminals and hackers-for-hire are motivated by quick money.

Private sector offensive actors develop and sell zero-day exploits, malicious software and hacking devices to cyber criminals. Yes, there is a sizeable market for that!

Hacktivists - hacker activists - are motivated by ideology. Environmental hacktivists might target gas- or coal-fired power plants.

Insider actors include disgruntled former or current employees, but also well-meaning employees who cause a CrowdStrike-sized disaster through sloppy programming.

Cybersecurity Risks

Knowing the enemies and the threats lets manufacturers better gauge the risks to their devices.

(37) ‘cybersecurity risk’ means the potential for loss or disruption caused by an incident and is to be expressed as a combination of the magnitude of such loss or disruption and the likelihood of occurrence of the incident;

(38) ‘significant cybersecurity risk’ means a cybersecurity risk which, based on its technical characteristics, can be assumed to have a high likelihood of an incident that could lead to a severe negative impact, including by causing considerable material or non-material loss or disruption;

EU CRA, Article 3(37,38) (emphasis mine)

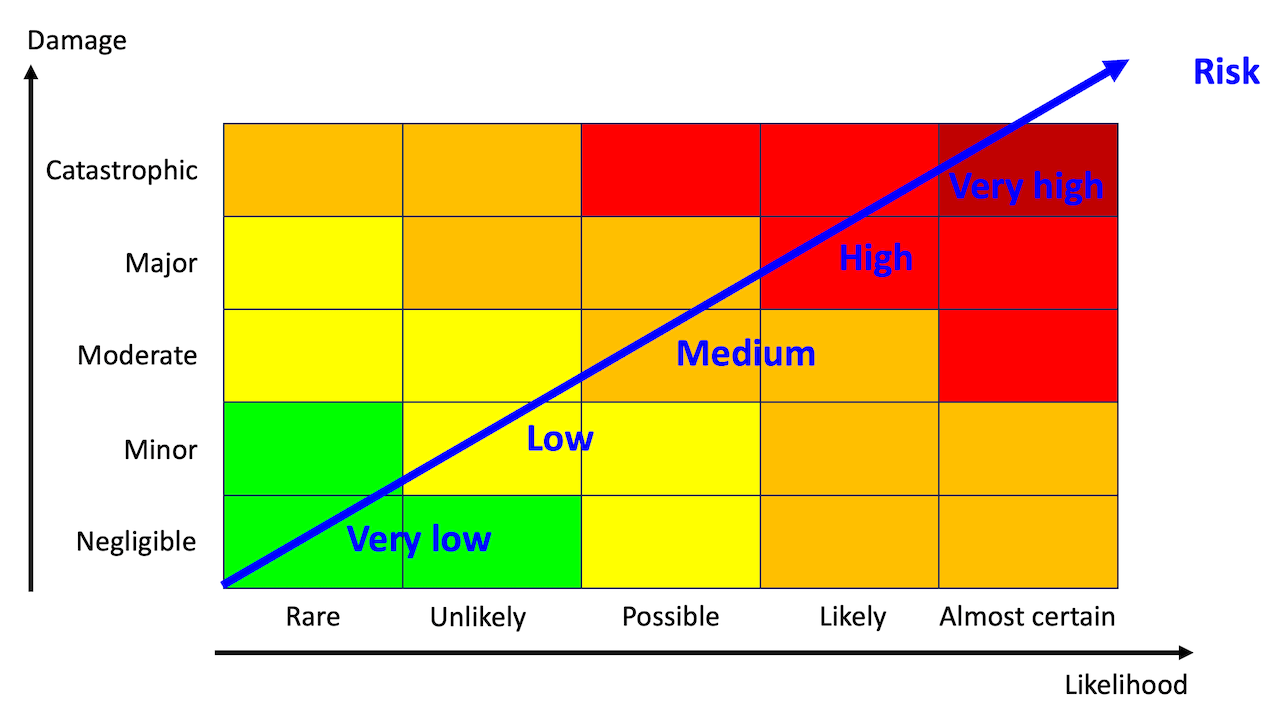

Risk is measured by combining the likelihood that a threat actor exploits a vulnerability with the damage caused by the exploitation. We estimate the likelihood and the damage separately and combine the results into a risk level. The risk assessment matrix from Lean Six Sigma helps us with that.

Let us evaluate the risk for one of the vulnerabilities above: The manufacturer’s support team can log in to all devices as root with ssh. Once attackers gain root access to the device, they can do anything on the device. They could, for example, install ransomware on the terminal, turn the harvester into an unusable chunk of metal, and extort money from the manufacturer and its customers. The manufacturer would violate most of the essential product requirements and face serious penalties under the EU CRA. In short, the damage would be catastrophic.

How likely is an attack? As I argued in a previous newsletter, hacking or stealing cars is far too easy. This is a sad fact, even though the car industry has been subject to cybersecurity regulations for many years (that’s why it is excluded from the EU CRA). In contrast, the EU CRA forces the agricultural industry and many other industries to seriously address cybersecurity for the first time. Many manufacturers lack the skills, and this is unlikely to change in the near future. This makes these manufacturers especially vulnerable.

These manufacturers may argue that they are too small or too unimportant to be a target for hackers. As their defences are weak or non-existent, their harvesters are easy targets even for less skilled hackers. Grounding a sizeable number of machines during the harvest could be pretty lucrative - and cause public panic. I would definitely rank the likelihood of an attack as possible - if not likely.

If we look up the likelihood “possible” and the damage “catastrophic” in the risk assessment matrix, we get “high” as the resulting risk. Without doubt, we are looking at a “significant cybersecurity risk” and hence at an exploitable vulnerability, which requires mitigation.
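The lookup in the risk assessment matrix can be written down in a few lines of Python. The sketch assumes a common 5×5 layout in which likelihood and damage are each scored from 1 to 5 and multiplied. The category names, the score thresholds and the function names are my assumptions for illustration, not the exact matrix from the figure. The thresholds are chosen so that the root-login example, “possible” and “catastrophic”, comes out as “high”, and a “high” risk is treated as significant.

```python
# Hypothetical 5-point scales, ordered from best to worst.
LIKELIHOOD = ["rare", "unlikely", "possible", "likely", "almost certain"]
DAMAGE = ["insignificant", "minor", "moderate", "major", "catastrophic"]


def risk_level(likelihood, damage):
    """Combine likelihood and damage into a risk level.

    Both inputs are scored from 1 to 5 and multiplied. The thresholds
    below are assumptions chosen for illustration.
    """
    score = (LIKELIHOOD.index(likelihood) + 1) * (DAMAGE.index(damage) + 1)
    if score >= 12:
        return "high"
    if score >= 5:
        return "medium"
    return "low"


def is_significant(likelihood, damage):
    """Assumption: a 'high' risk counts as a significant cybersecurity risk."""
    return risk_level(likelihood, damage) == "high"


# Root login via ssh for the support team: possible x catastrophic.
print(risk_level("possible", "catastrophic"))
print(is_significant("possible", "catastrophic"))
```

The example prints “high” and True. A multiplicative score is only one way to fill the matrix. Many matrices are defined cell by cell, in which case a lookup table keyed by the pair (likelihood, damage) encodes them more faithfully.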

Very good article.

Note that this looks like what the FDA requires from medical device manufacturers: Cybersecurity in Medical Devices: Quality System Considerations and Content of Premarket Submissions

Really nice overview as always, Burkhard! I think that threat modeling and risk assessment are quite possibly the _most_ important elements of the CRA. How you define your threat model determines how you assess cybersecurity risks, and it's your assessment of cybersecurity risks that determines what controls or mitigations you need to apply. So a poorly done threat model or risk assessment can add serious cost and complexity to the final built product--we all know that bolting on security features after the fact is 10x as expensive and 1/10th as effective as building security in from the start.

One thing I'll add on that topic is that cybersecurity risk management isn't so different from risk management in other domains, at least at a high level. The four basic tools are the same: Avoid, Reduce, Transfer, Accept. There are really just two main differences: first, we usually refer to risk reduction strategies as "mitigations" or "controls", and the line between avoiding risk and reducing risk is slightly fuzzier. And second, regulations like the CRA make it much more difficult to just accept cybersecurity risks, and basically impossible to transfer them to 3rd parties.

I'd also like to mention something on the topic of vulnerabilities. Although you're of course right in your description of generic, product-wide vulnerabilities, it's worth giving special attention to CVE management, i.e. the process and strategy for dealing with documented vulnerabilities in the open-source dependencies of the product. A risk that applies to every product is "an exploitable vulnerability is discovered in one of my software dependencies"--and the only effective mitigation for that risk is to make sure an accurate, actionable SBOM for the product exists, and regularly scan it for exposure to CVEs.