I wish you all a healthy, successful and adventurous new year 2023.

All the best, Burkhard 💜

Why TDD is a Main Driver for High Performance

Autumn 2022 saw a new fashion: TDD bashing. I came across the three posts listed below in the section Around the Web: TDD Bashing, and their reverberations on social media. The posts are unfounded and ignorant opinion pieces.

In 2022, we can and should discuss the effectiveness of TDD on an objective level. In the book Accelerate: Building and Scaling High Performing Technology Organizations (see also my review), Nicole Forsgren and her coauthors provide empirical evidence that applying the principles and practices of Continuous Delivery leads to less rework, higher quality, faster delivery, less team burnout and a more collaborative work culture. In short, it leads to higher-performing teams and more profit for the company.

Our research shows that technical practices [like test-driven development and continuous integration] play a vital role in achieving [higher Continuous Delivery performance].

Nicole Forsgren et al., Accelerate: Building and Scaling High Performing Technology Organizations, 2018, p. 41

This gave me the idea to judge the effectiveness of TDD by how well it meets the principles and practices of Continuous Delivery. This makes different approaches comparable. For example, writing tests after the code gives developers feedback later than writing tests before the code. That’s a clear point for TDD, as positive feedback loops - getting frequent feedback fast - are essential for high-performing teams.

Let me be clear here. I am not saying that using TDD and the other Continuous Delivery practices guarantees high performance. I am only saying that using TDD and the other practices makes high performance much more likely than using only some or none of these practices. In other words, your project is much more likely to succeed if you apply the Continuous Delivery principles and practices. And TDD is a crucial driver of that success!

Let me start with Kent Beck’s original definition of TDD from 2003.

Red - Write a little test that doesn’t work, and perhaps doesn’t even compile at first.

Green - Make the test work quickly, committing whatever sins necessary in the process.

Refactor - Eliminate all of the duplication created in merely getting the test to work.

Kent Beck, Test-Driven Development - By Example, 2003

Developers go through the full red-green-refactor cycle every couple of minutes. As developers write a test first, they get almost instant feedback on whether their code works. TDD embodies the mantra of Continuous Delivery: Get frequent feedback fast. All other feedback loops of Continuous Delivery - including feedback from integration and acceptance testing, builds, integration, static code analysis, customers, users and architecture models - are slower and less frequent.

Always guided by feedback from the tests, TDD makes developers work in small steps: the first principle of Continuous Delivery. If a test fails, the developer changes or throws away a few lines of code. She loses a few minutes of work but understands the problem better. This reduces the risk of missing a deadline, because finding and fixing the issue days or weeks later takes considerably more time.

A “little test” is a test that focuses on a partial aspect of a function and not on the complete function. The developer only needs to work out a partial solution. She only keeps as much in her head as needed to move from Red to Green. She splits up the problem into manageable chunks. This divide-and-conquer approach reduces complexity. The chance of solving the overall problem correctly increases.
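To make the idea of a “little test” concrete, here is a minimal sketch in C++. The function isLeapYear and the test names are hypothetical, and the tests use plain assert instead of a framework like Catch2 so that the block compiles on its own. Each test pins down one partial aspect of the Gregorian leap-year rule; the comments in isLeapYear indicate which test drove which line from Red to Green.

```cpp
#include <cassert>

// Hypothetical function under test. Its final shape emerged from four
// little tests, each adding one partial aspect of the leap-year rule.
bool isLeapYear(int year)
{
    if (year % 400 == 0) return true;   // made test 4 pass
    if (year % 100 == 0) return false;  // made test 3 pass
    return year % 4 == 0;               // made tests 1 and 2 pass
}

// Test 1: years divisible by 4 are leap years.
void testYearDivisibleBy4IsLeap() { assert(isLeapYear(2024)); }

// Test 2: years not divisible by 4 are not leap years.
void testYearNotDivisibleBy4IsNotLeap() { assert(!isLeapYear(2023)); }

// Test 3: century years are not leap years...
void testCenturyYearIsNotLeap() { assert(!isLeapYear(1900)); }

// Test 4: ...unless they are divisible by 400.
void testYearDivisibleBy400IsLeap() { assert(isLeapYear(2000)); }
```

None of the tests needs the whole rule in the developer's head. After test 2, the one-line `year % 4 == 0` is a complete, green solution for everything tested so far.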

TDD enables developers to build quality in, the second principle of Continuous Delivery. The test from the Red step is the first client of an interface. Having a test and thinking about the interface before the implementation makes it a lot easier to control and observe the behaviour of a class.

You see the opposite effect in legacy code, which has hardly any tests and comes with bad interfaces. It is hard to test, because it is very difficult to control the execution path through multiple functions and to observe what the interface functions do.

Easier to control and to observe implies easier to test. If small building blocks like functions and classes are easier to test, then big building blocks like components and applications are easier to test, too. Writing meaningful integration, acceptance and system tests becomes easier and faster. This gives developers more frequent feedback faster and increases the quality.
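A minimal C++ sketch of what “easier to control and to observe” can mean in practice. The names Clock, FakeClock and SessionTimer are hypothetical. A test written before the implementation quickly runs into the question of how to control time, so it asks for the current time to come in through an interface rather than being read from the system clock inside the class. The test then controls the input through FakeClock and observes the behaviour through the return value of isExpired.

```cpp
#include <cassert>
#include <chrono>

using std::chrono::milliseconds;

// Interface requested by the test: the class under test must not read
// the system clock directly, so that tests can control time.
class Clock
{
public:
    virtual ~Clock() = default;
    virtual milliseconds now() const = 0;
};

// Test double: the test moves time forward explicitly.
class FakeClock : public Clock
{
public:
    milliseconds now() const override { return m_now; }
    void advance(milliseconds delta) { m_now += delta; }

private:
    milliseconds m_now{0};
};

// Hypothetical class under test: a session expires after a fixed timeout.
class SessionTimer
{
public:
    SessionTimer(const Clock &clock, milliseconds timeout)
        : m_clock{clock}, m_timeout{timeout}, m_start{clock.now()} {}

    bool isExpired() const { return m_clock.now() - m_start >= m_timeout; }
    void restart() { m_start = m_clock.now(); }

private:
    const Clock &m_clock;
    milliseconds m_timeout;
    milliseconds m_start;
};

void testSessionExpiresExactlyAtTimeout()
{
    FakeClock clock;
    SessionTimer timer{clock, milliseconds{500}};
    clock.advance(milliseconds{499});   // control the input...
    assert(!timer.isExpired());         // ...and observe the output
    clock.advance(milliseconds{1});
    assert(timer.isExpired());
}
```

The same test against a class that calls the system clock internally would have to sleep for half a second and could still be flaky.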

Your Continuous Delivery pipeline can run all these tests automatically. You can automate most or all manual tests. So, TDD helps automate repetitive tasks, the third principle of Continuous Delivery.

I would paraphrase the Refactor step as: nothing is cast in stone. If developers learn that a class interface or a component design is inadequate, they can always refactor their way to a better interface or design. If a test turns red, they know that the last change violated the expected behaviour of a class. Such a big refactoring certainly takes time, but it takes a lot less time than a “refactoring” with too few, bad or no tests.

TDD makes it cheap to experiment with ideas and to adapt the direction when new things come up. In short, TDD furthers continuous improvement, the fourth principle of Continuous Delivery.

The fifth and final principle is easy for TDD to meet: everyone is responsible for changing the code. The numerous micro-tests created in the Red step give developers high confidence that their changes don’t break anything. Even developers hardly familiar with a piece of code can safely change it. The higher-level integration, acceptance and system tests act as an additional safety net. Trunk-based development, with its high-frequency integration directly into the mainline, is only possible with this safety net.

My conclusion: TDD is a crucial driver for the success of Continuous Delivery, for creating high-performing teams and hence for the success of projects.

My experience bears that out. On one project (there are more!), I spent 12 weeks trying to convince a developer to use TDD for his code. His only concession was to write some alibi tests after writing the code. It took me 6 weeks to make his code releasable. Unsurprisingly, the project missed a couple of deadlines and cost much more than expected.

Once I had fulfilled my contract, I left the project. I was fed up with cleaning up this developer’s mess and having to justify to management why the project was late. With their sloppy work, developers like him poison team morale. And that may be a lot worse than time and budget overruns.

Around the Web: TDD Bashing

The principles and practices of Continuous Delivery give us a framework for discussing the effectiveness of TDD on a more objective level than mere opinion. I am going to use this framework to rebut at least some of the “arguments” of the TDD bashers. Wisen Tanasa (@ceilfors) does an excellent job of debunking fallacies about TDD and of explaining why developers shouldn’t do TDD out of guilt.

Hillel Wayne: I have complicated feelings about TDD

Wayne’s argument rests on his distinction between “weak TDD” and “strong TDD”.

I practice “weak TDD”, which just means “writing tests before code, in short feedback cycles”. This is sometimes derogatively referred to as “test-first”. Strong TDD follows a much stricter “red-green-refactor” cycle:

Write a minimal failing test.

Write the minimum code possible to pass the test.

Refactor everything without introducing new behavior.

What annoys Wayne is the “emphasis on minimality”. He doesn’t give a reference for his definition. It certainly differs from Beck’s original definition, quoted at the beginning of this newsletter. Beck doesn’t mention minimality at all. And that’s an important difference.

Beck’s definition is pragmatic. Beck knows that developers won’t find the best solution right away. The Refactor step lets developers improve their solution when they have a better idea and when they deem the improvement worthwhile. I can’t see much difference between Wayne’s notion of weak TDD and Beck’s original TDD definition.

I’m going to focus on a “maximalist” model of TDD:

[…]

TDD always leads to a better design.

TDD obviates other forms of design.

TDD obviates other forms of verification.

TDD cannot fail. If it causes problems, it’s because you did it wrong.

I don’t believe there are many true maximalists out there […] Most advocates are moderate in some ways and extreme in others […] But the maximalist is a good model for what the broader TDD dialog looks like.

No, this is not what the “broader TDD dialog looks like”. This is a straw man argument! In their absolute nature, these claims are all nonsense and easy to refute. I don’t know why Wayne goes on for another 1800 words to tear down this straw man only to conclude that “often TDD doesn’t make the cut” for improving your code. You can deduce anything from wrong assumptions. The research on Continuous Delivery comes to a very different and more nuanced conclusion.

I would rephrase the above bullet points as follows:

TDD enables better design and other forms of design. The Red step makes you think first about the interface and the contract defined by this interface. With the test, you clarify the expected behaviour. More often than not, your first version of the interface will not be the best. No problem. You use the Refactor step to move towards a better design. You must come up with a design idea. TDD won’t! TDD only makes it easier to try out different design ideas.

I certainly don’t mind a bit of up-front design for a class, a component or even a system. I would accompany it with a walking skeleton implementing the architecturally significant requirements. Of course, I’d use TDD to implement the skeleton. That makes it easy to try out different design and architecture ideas.

TDD enables other forms of validation. TDD makes your code testable. The more testable the code is, the easier it is to write acceptance, integration, end-to-end, model-based and other tests. All these types of tests provide their unique value. Nevertheless, I strive to turn these higher-level tests into unit tests, because unit tests take less effort to write and give me earlier feedback.

By the way, the Ports-and-Adapters architecture makes writing integration, acceptance and system tests a lot easier. It’s the interaction between multiple practices like automated testing (including TDD) and loosely coupled architecture that makes Continuous Delivery work best.

If you apply TDD without thinking, you do it wrong. TDD is not an algorithm that magically yields the right solution when applied thoughtlessly: red-green-refactor, rinse and repeat forever. It still requires thinking about which red, green or refactor step is the best one. What is best at the moment may not turn out to be the best in an hour, a day, a week or a month. TDD has the tool to fix this built in: refactoring. TDD makes it cheap to change decisions.
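The Ports-and-Adapters architecture mentioned above can be sketched in a few lines of C++. All names here (AlarmNotifier, TemperatureMonitor, RecordingNotifier) are hypothetical. The core logic depends only on a port, an abstract interface owned by the core. A production adapter would implement the port with real I/O (e-mail, CAN bus, a GUI), while the test adapter simply records what the core sends. This is what lets acceptance-style tests of the core run without any hardware or network.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Port: an interface owned by the application core.
class AlarmNotifier
{
public:
    virtual ~AlarmNotifier() = default;
    virtual void notify(const std::string &message) = 0;
};

// Core: pure business logic without I/O, depending only on the port.
// Raises one alarm when the limit is exceeded and re-arms once the
// temperature is back at or below the limit.
class TemperatureMonitor
{
public:
    TemperatureMonitor(AlarmNotifier &notifier, double limit)
        : m_notifier{notifier}, m_limit{limit} {}

    void onReading(double celsius)
    {
        if (celsius > m_limit && !m_alarmActive) {
            m_alarmActive = true;
            m_notifier.notify("Temperature above limit");
        } else if (celsius <= m_limit) {
            m_alarmActive = false;
        }
    }

private:
    AlarmNotifier &m_notifier;
    double m_limit;
    bool m_alarmActive{false};
};

// Test adapter: records notifications instead of sending them anywhere.
class RecordingNotifier : public AlarmNotifier
{
public:
    void notify(const std::string &message) override { messages.push_back(message); }
    std::vector<std::string> messages;
};

// Acceptance-style test of the core through its port.
void testAlarmIsRaisedOnlyOnceWhileAboveLimit()
{
    RecordingNotifier notifier;
    TemperatureMonitor monitor{notifier, 80.0};
    monitor.onReading(75.0);
    monitor.onReading(85.0);
    monitor.onReading(90.0);
    assert(notifier.messages.size() == 1);
}
```

Swapping RecordingNotifier for a production adapter changes nothing in TemperatureMonitor, so the same core can also be exercised by integration tests that plug in the real adapter.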

The research on Continuous Delivery tells us that TDD is a crucial driver for success but not the only one. Other drivers are version control, automated deployment, automated testing, continuous integration, trunk-based development, loosely-coupled architecture and empowered teams. When a team exploits the positive feedback loops between these drivers, its performance will go through the roof.

Actually, the best thing would be to ignore posts like Wayne’s. Unfortunately, developers use them as a justification for not using TDD. It was such a developer who made me aware of this post. I had the bad luck to work with this guy for the better part of 2022. He was cheap (as a contractor) and so was his work.

Shai Almog: Why I Don’t do TDD

Almog just can’t get his head around writing tests first.

[…] for most cases, writing the code first seems more natural to me. Reviewing the coverage numbers is very helpful when writing tests and this is something I do after the fact.

Whether TDD feels unnatural to someone isn’t a good argument against it. That’s just another opinion that ignores the research. Almog prefers to write tests after he has written the code (test-after development, or TAD for short). He thinks that this approach is as good as TDD.

[…] should we build our code based on our tests [TDD] or vice versa [TAD]? There are some cases where [TDD] makes sense, but not as often as one would think. […] TDD proponents will be quick to comment that a TDD project is easier to refactor since the tests give us a guarantee that we won’t have regressions. But this applies to projects with testing performed after the fact.

When you do TAD, you write untested code for hours before you write a test. You juggle the normal, corner and error cases in your head at the same time. You want to find a good solution for all these cases at once. You delay writing the test even more. Slightly exhausted, you’ll finally write some alibi tests - more out of guilt than conviction. That’s how I see people doing TAD on projects all the time.

The first problem is that you get feedback much later than you would with TDD. You get feedback only when you write the test, which is long after you wrote the code. TAD violates the principle that makes Continuous Delivery work: get frequent feedback fast.

The second problem is that you end up with lower-quality tests than with TDD. You have wrestled with the code for, say, two hours before you write the tests. You know exactly how your code behaves. Your tests will naturally follow the code. But this is not necessarily how the code is expected to behave. Writing tests first (the Red step of TDD) helps avoid this problem. Your brain is not yet biased by the implementation. It is in test mode (what are the normal, corner and error cases?), not in implementation mode.

But even with perfect coverage [unit tests from TDD] don’t test the interconnect properly. We need integration tests and they find the most terrible bugs.

[…] TDD over-emphasizes the “nice to have” unit tests, over the essential integration tests. Yes, you should have both. But I must have the integration tests. Those don’t fit as cleanly into the TDD process.

That is just a variant of Wayne’s claim above that “TDD obviates other forms of verification”. No surprise, because Almog “really liked” Wayne’s post. In contrast to TAD, TDD makes code more easily testable. This makes it easier to write integration tests. Well, you know my arguments by now.

Martin Hořeňovský: The Little Things: My ?radical? opinions about unit tests

Test Driven Development (TDD) is not useful for actual development.

In my experience, there are two options when I need to write a larger piece of code. The first one is that I know how to implement it; thus, I get no benefit from […] TDD. The second one is that I don't know how to implement something, and then TDD is still useless because what I actually need is […] up-front [design].

The research on Continuous Delivery says otherwise. The irony is that Hořeňovský is the creator of Catch2, a fairly well-known unit-test framework for C++. Hence, he should have a more substantiated view on TDD. The ultimate irony is that developers using TDD would be among the heaviest users of Catch2. Will I recommend Catch2 to others? Probably not.

In my 25-year career, I have encountered hundreds of developers (myself included) who produced buggy code although they knew exactly how to implement things. Writing tests first (the Red step) gives you an early warning that something went wrong.

Up-front design is a bad way to cut through complexity (things you “don’t know how to implement”). A better approach is to divide the problem into smaller problems that are easier to solve. TDD has this divide-and-conquer approach built in. The little test from the Red step addresses only a little part of the problem. By repeating the TDD cycle, you solve many little problems, which together solve the overall problem.

My Content

Applying TDD to Classes Accessing Files

Tests reading from or writing to files are not considered unit tests, because they are slow, they may introduce dependencies between tests, and they make it hard to check all normal, corner and error scenarios. I introduce a class TextFile that reads strings line by line from a real file in production code and from a string list in test code.

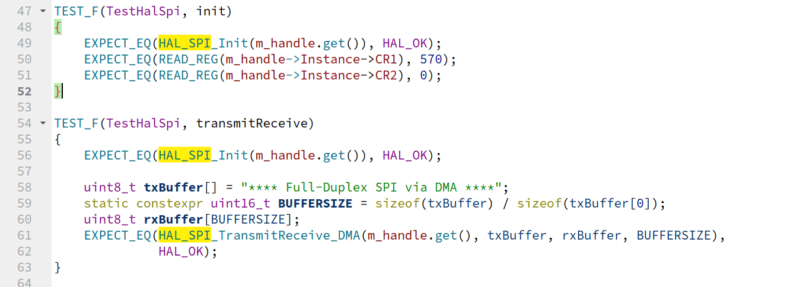
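The TextFile idea described above might look roughly like this in standard C++. This is a sketch under assumptions, not the code from the post: it uses an abstract interface with two implementations, whereas the post's TextFile may be a single class with a different interface, and the names DiskTextFile, StringListTextFile and countSettings are hypothetical. Production code reads from a real file through std::ifstream; the test feeds lines from a string list, so it needs no file system, runs fast and can cover corner cases like empty lines directly.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical interface for line-by-line reading.
class TextFile
{
public:
    virtual ~TextFile() = default;
    // Returns the next line, or std::nullopt at the end of the input.
    virtual std::optional<std::string> readLine() = 0;
};

// Production implementation: reads from a real file. If the file cannot
// be opened, std::getline fails and readLine reports end of input.
class DiskTextFile : public TextFile
{
public:
    explicit DiskTextFile(const std::string &path) : m_stream{path} {}

    std::optional<std::string> readLine() override
    {
        std::string line;
        if (std::getline(m_stream, line)) {
            return line;
        }
        return std::nullopt;
    }

private:
    std::ifstream m_stream;
};

// Test implementation: serves lines from an in-memory string list.
class StringListTextFile : public TextFile
{
public:
    explicit StringListTextFile(std::vector<std::string> lines) : m_lines{std::move(lines)} {}

    std::optional<std::string> readLine() override
    {
        if (m_next == m_lines.size()) {
            return std::nullopt;
        }
        return m_lines[m_next++];
    }

private:
    std::vector<std::string> m_lines;
    std::size_t m_next{0};
};

// Hypothetical code under test: counts lines that are neither empty nor comments.
std::size_t countSettings(TextFile &file)
{
    std::size_t count = 0;
    while (auto line = file.readLine()) {
        if (!line->empty() && line->front() != '#') {
            ++count;
        }
    }
    return count;
}

void testCommentsAndEmptyLinesAreSkipped()
{
    StringListTextFile file{{"# comment", "", "speed=3", "mode=auto"}};
    assert(countSettings(file) == 2);
}
```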

Applying TDD to HAL Drivers with Memory-Mapped I/O

One of my clients manufactures solid-state drives (SSDs). They asked me to get the HAL driver of their SSDs under test so that they could develop it with TDD. The unit tests run on PCs with 64-bit Intel processors. The code under test normally runs on 32-bit ARM microcontrollers, but must now run on the PCs as well. The driver code accesses hard-wired 32-bit addresses for memory-mapped I/O. I show two options for dealing with the 32-bit addresses on a 64-bit PC.
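One common way to get such a driver running on a 64-bit PC is to inject the register base address instead of hard-wiring it. This is not necessarily either of the two options shown in the post. In the hypothetical sketch below, SsdController, the register offsets, the bit masks and the peripheral address in the comment are all made up. On the target, the base pointer would point at the real peripheral; in the test, it points at an ordinary array that stands in for the registers.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical register layout: offsets are indices of 32-bit words.
constexpr std::uint32_t kControlReg = 0;
constexpr std::uint32_t kStatusReg = 1;
constexpr std::uint32_t kEnableBit = 1u << 0;
constexpr std::uint32_t kReadyBit = 1u << 3;

// Hypothetical driver that receives the register base instead of using a
// hard-wired 32-bit address. On the target, it could be constructed as
//   SsdController c{reinterpret_cast<volatile std::uint32_t *>(0x40000000u)};
// where 0x40000000 is a made-up peripheral address.
class SsdController
{
public:
    explicit SsdController(volatile std::uint32_t *base) : m_base{base} {}

    // Read-modify-write spelled out to make the volatile read and write explicit.
    void enable() { m_base[kControlReg] = m_base[kControlReg] | kEnableBit; }

    bool isReady() const { return (m_base[kStatusReg] & kReadyBit) != 0; }

private:
    volatile std::uint32_t *m_base;
};

// On the PC, a plain array plays the role of the memory-mapped registers.
void testEnableSetsEnableBitAndKeepsOtherBits()
{
    volatile std::uint32_t registers[2] = {0x10u, 0u};
    SsdController controller{registers};
    controller.enable();
    assert(registers[kControlReg] == (0x10u | kEnableBit));
}

void testReadyFlagIsReadFromStatusRegister()
{
    volatile std::uint32_t registers[2] = {0u, 0u};
    SsdController controller{registers};
    assert(!controller.isReady());
    registers[kStatusReg] = kReadyBit;   // simulate the hardware setting the flag
    assert(controller.isReady());
}
```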

QML Engine Deletes C++ Objects Still In Use – Revisited with Address Sanitizers

This post garnered by far the most thank-you notes of all my posts. "I was about to give up. You saved my project!" - "You saved me weeks of debugging." I use address sanitizers to find places where the QML engine deletes objects that are still needed in the C++ parts of the application. Last month, I added a section on how to enable address sanitizers for multiple targets (executables, libraries, tests, etc.) by setting two CMake variables: CMAKE_CXX_FLAGS_INIT and CMAKE_EXE_LINKER_FLAGS_INIT.